爬取用户提交关键字在博客园搜索出来的文章,一页十篇,共50页,获取标题,内容,发表时间,推荐量,评论量,浏览量

写入sql server数据库,代码如下;

import requests from lxml import etree import pymssql import time # 连接sql server数据库 conn = pymssql.connect(host='127.0.0.1', user='sa', password='root', database='a', charset='utf8') cursor = conn.cursor() headers = { 'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36', 'cookie': '_ga=GA1.2.789692814.1575245968; _gid=GA1.2.90574348.1575245968; __gads=ID=d0b3d037d343ea7f:T=1575246122:S=ALNI_MYb3-Nqsf59wLf_5kAyYqYllV7EFA; _gat=1; .Cnblogs.AspNetCore.Cookies=CfDJ8DeHXSeUWr9KtnvAGu7_dX-Wfut1-dgX_yW1t_fPBSG6ejwby5on7dPqagwvw_WdjyzxkSv4BwoUWPbClu4VNcySbHU5xW1f4vpuOB4NET3TigRH9T3mlgNwIWy7oqLFygXjQxNj2gkFzpDx7Yq8T7HJOmxg30lx50dN4ssnGTWVCTppMnHJT1NyfQs58HorucZThRwEjTxDMdcAI_VoGbd-EmMOUT9h-fLvnQ_hn4b8lQ9evYMG4n9nmmArBnhf3wNo-RKb7TgMCx6QUWWIbYXp2M2TjzG3uzbO3rnEljkTL1cVEB6My97ZQfjLRe27RbArxp4wltsXi4WkBcNTQAXyI2SpiFZYCcBZTxT_uC-Z5Phphjs-sl1_iu7sIR-8m0qysad-BuKdS6Qwvj5qlJt1JCJbi_WFH6Dzs_rgJvn0DfPQE50sAlHOs6Dhgqc7N-YDVqpSphJDRlRkIM6JBH8Pq6EZ8S0IRbZsdkIqiJ54CD-H5G5Hx9oATlEakAqDnWyZ4LlBVyu1wkne48R5usxkmITyZ1PDWwHC5pKRKxfelXDoR05REO4GDOXhXxG5XEZeYA1rWdJI7AKnIM5RM9Y; .CNBlogsCookie=E4793F450C4325E3C9EF21B78B1DE43F6258C9FD5951338859D96A5EC8795064AB518501755136F3A4CB1CE647EBD2CC352C1E9EBDC6E460B6320E9F62F083A52A635A4651A3D1082631D55FCE58E283B97D016E61DC411E094F6EA9A9CF9A59A292C16F' } """ 标题,内容,发表时间,推荐量,评论量,浏览量 """ # 写入数据库 def insert_sqlserver(key,data): try: cursor.executemany( "insert into {}(title,contents,create_time,view_count,comment_count,good_count) VALUES(%s,%s,%s,%s,%s,%s)".format(key),data ) conn.commit() except Exception as e: print(e,'写入数据库时错误') # 获取数据 def get_all(key,url): for i in range(1,51): next_url = url+'&pageindex=%s'%i res = requests.get(next_url,headers=headers) response = etree.HTML(res.text) details = response.xpath('//div[@class="searchItem"]') data = [] print(next_url) for detail in details: try: detail_url = detail.xpath('./h3/a[1]/@href') good = detail.xpath('./div/span[3]/text()') comments = ['0' if not detail.xpath('./div/span[4]/text()') else detail.xpath('./div/span[4]/text()')[0]] views = ['0' if not detail.xpath('./div/span[5]/text()') else detail.xpath('./div/span[5]/text()')[0]] res = requests.get(detail_url[0],headers=headers) response = etree.HTML(res.text) title = response.xpath('//a[@id="cb_post_title_url"]/text()')[0] contents = response.xpath('//div[@id="post_detail"]') if not response.xpath('//div[@class="postbody"]') else response.xpath('//div[@class="postbody"]') content = etree.tounicode(contents[0],method='html') create_time = response.xpath('//span[@id="post-date"]/text()')[0] print(detail_url[0],good[0],comments[0],views[0],title,create_time) data.append((title,content,create_time,views[0],comments[0],good[0])) time.sleep(2) except Exception as e: print(e,'获取数据错误') insert_sqlserver(key,data) # //*[@id="searchResult"]/div[2]/div[2]/h3/a # 主函数并创建数据表 def main(key,url): cursor.execute(""" if object_id('%s','U') is not null drop table %s create table %s( id int not null primary key IDENTITY(1,1), title varchar(500), contents text, create_time datetime, view_count varchar(100), comment_count varchar(100), good_count varchar(100) ) """%(key,key,key)) conn.commit() get_all(key,url) if __name__ == '__main__': key = 'python' url = 'https://zzk.cnblogs.com/s?t=b&w=%s'%key main(key,url) conn.close()

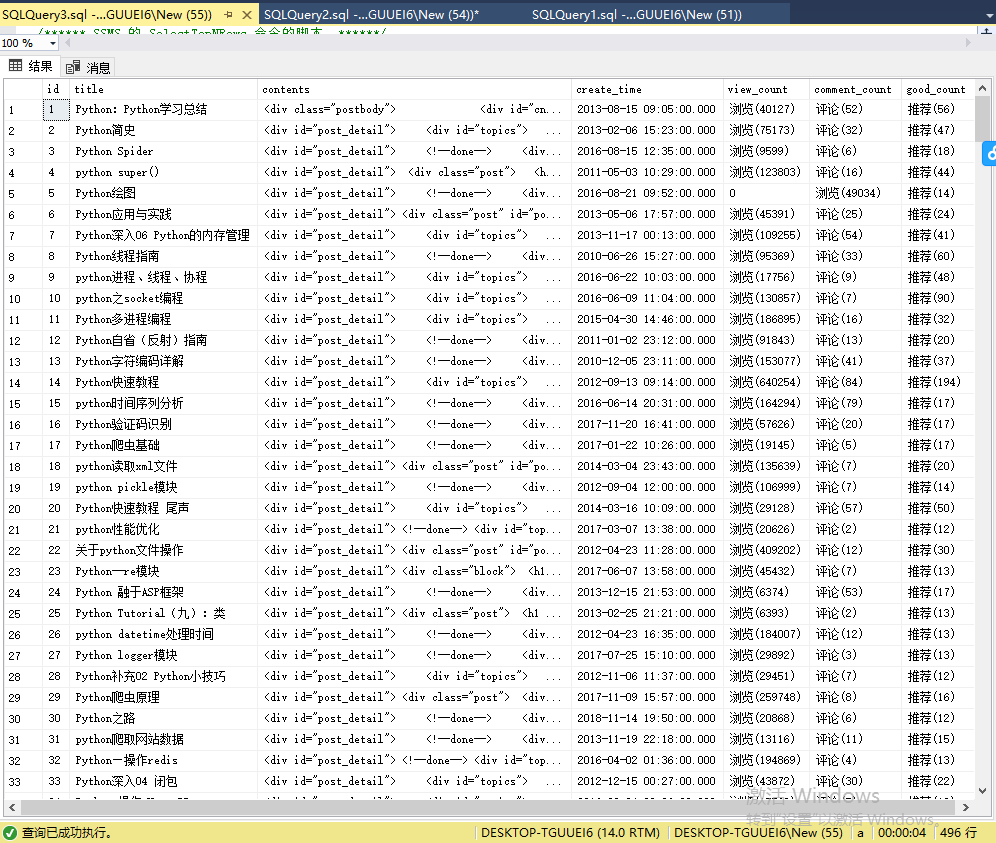

查看数据库内容:

done